Labour promised tough new rules for the world’s leading AI developers. But as Trump returns, ministers are thinking again.

LONDON — On Tuesday evening tech leaders gathered at No. 10 Downing Street for drinks with Keir Starmer.

Over tea, scones and fizz, senior members of his British government enthused about the potential of artificial intelligence to revive the country’s stuttering economy and struggling public services.

But a promise made in the Labour Party’s election manifesto last summer to enforce safety rules on the most powerful AI models is now on the back burner following the election of Donald Trump and amid industry pushback, according to multiple insiders, many of whom were granted anonymity to speak freely.

Ministers planned to start a consultation on an AI regulation bill last fall. It was ready to go, but it is now earmarked for “spring” as civil servants work out how to redraft it to keep the U.S. administration and tech companies onside.

Until the U.S. election, Labour ministers repeatedly said the bill would force firms developing frontier AI models to follow rules they had committed to voluntarily at the AI Safety Summit at Bletchley Park in 2023. That included giving Britain’s AI Safety Institute (AISI) access to test the latest models before they are released, something only three companies have so far done voluntarily.

But thinking inside the U.K. government has changed, according to four people familiar with the consultation. Ministers have dropped language about forcing AI companies to give the AISI pre-release access for testing, which was also the subject of industry resistance.

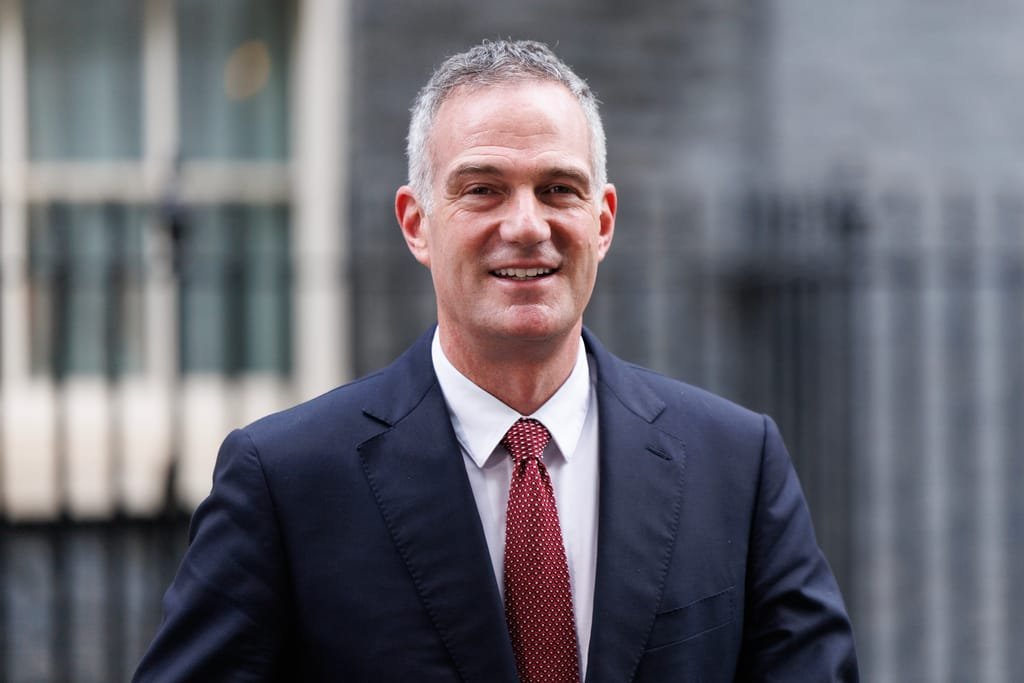

The last time the country’s technology secretary Peter Kyle mentioned it publicly was the morning after Trump was elected. A fifth person said: “I think there is too much resistance. Even under the Democrats would the U.S. accept mandatory testing of its companies? That was never really resolved.”

Enter Trump

One of Trump’s first moves after entering office was to revoke an executive order from Joe Biden on AI safety, putting a major question mark over the future of the U.S. version of the AI Safety Institute, which gave the U.K. some cover to test the models of American tech firms. Its director and other senior leaders have stepped down.

One industry representative, briefed on government plans, said: “There is real nervousness in Whitehall about where the U.S. administration will go on AI safety. Labour is still trying to work out how to deal with the administration. They want to test out the temperature and find a consensus with the U.S. before going into a consultation.”

The latest big name to warn against enforcing safety tests was LinkedIn co-founder Reid Hoffman, who told POLITICO’s Power Play podcast that moving ahead would be “unwise.”

A spokesperson for Republican senator Ted Cruz, the chair of the powerful Senate Commerce Committee, accused Biden’s AI policies of “imposing censorship diktats and governmental barriers to innovation disguised as safety measures.”

But the U.K.’s technology secretary, Peter Kyle, argued that revoking the executive order would not affect the work of Britain’s AISI because the order dealt with domestic matters. He said relationships between AI safety institutes would “adapt over time.”

A spokesperson for the Department for Science, Innovation and Technology (DSIT) added that the government “remained fully committed” to legislation, which would be brought forward “as parliamentary timetables allow.” But they confirmed the proposals are being reviewed.

“We are continuing to engage extensively to refine our proposals and will launch a public consultation in due course to ensure our approach is fit for purpose and can keep pace with the technology’s rapid development,” the spokesperson added.

Slow down

Kirsty Innes, director of technology at Labour Together, a think tank close to the Labour leadership, said ministers should not rush into legislating.

“I think getting to grips with AI is going to be a whole-of-government project for the whole mandate and they already have plenty underway,” she said. “There will of course need to be some new legislation on AI, but they will want to go into that with a very clear and specific idea of what needs to be done via an AI bill and what can be tackled elsewhere.”

The Tony Blair Institute, a think tank which has led much of the Labour government’s thinking on the technology, has also warned about the practicalities of an AI bill.

Britain’s AI Opportunities Action Plan, written by tech investor Matt Clifford, didn’t call for AI legislation, arguing instead that safety concerns should be dealt with by giving more money to existing regulators, expanding the AISI and developing an AI assurance market.

Launching that plan in January, Starmer struck a cautious tone on legislation. “We will test and understand AI before we regulate it to make sure that when we do it, it is proportionate and grounded in the science,” he said.

Clifford is now advising the government, alongside Demis Hassabis, the CEO of Google DeepMind, who said he supports “nimble” regulation.

“What we would have discussed, perhaps regulating five years ago, is not what we would try to regulate today,” he said. “What I would suggest, which is difficult, is a kind of nimble, adaptable approach to regulation.”

Elizabeth Seger, director of digital policy at think tank Demos, said: “Trying to find a nice middle ground between the EU and the U.S. quickly and efficiently is very difficult. Then you have to square that with the aim of the AI Opportunities Plan which is to attract industry.”

She called on the government to present the bill as part of a wider package on AI which takes account of concerns outside of frontier safety, such as how to fund the regulators and public sector use of the technology.

Next stop, Paris

The U.K.’s rethink comes as world leaders and tech executives travel to Paris this weekend for an AI summit hosted by Emmanuel Macron, which follows U.K.-organized safety summits at England’s Bletchley Park and in Seoul, South Korea.

But this time safety is taking a back seat to “inclusive and sustainable” AI.

Starmer is sending his tech secretary, Kyle, in his place, while a draft of the declaration, which countries are expected to sign, commits only to further monitoring of previous voluntary safety commitments, rather than introducing new ones.

The AISI will also be showing off its capabilities in Paris, publishing the results of cybersecurity tests on non-English language models. Demonstrating British leadership there, rather than leading on legislating, could be the way to keep the U.S. and Trump onside.

Sam Hammond, senior economist at the Foundation for American Innovation, said: “I expect continuity (between Trump and Biden) on core issues of national security, such as retaining capacity to evaluate models for severe risks.”

Secretary of State Marco Rubio backed this up at his confirmation hearing, stating the AUKUS military alliance between the U.S., U.K. and Australia could be a “blueprint” for an AI partnership between the Western allies.

Commerce Secretary Howard Lutnick, the man now with ultimate responsibility for the U.S. AISI, wouldn’t commit to its future at his confirmation hearing last week, but gave clear support for the government’s role in setting technical standards for AI, citing cybersecurity standards as a model to follow.

Former U.K. AISI staffer Herbie Bradley said it would be “wise” for the British safety institute to focus on AI security and work closely with the U.S. through networks like the Five Eyes intelligence alliance.

“They should think more about the governance of AI applications in military and national security,” he said last month.

Kyle, meanwhile, said he expected the role of AI safety institutes to pivot to security. “As AI evolves and there’s more national security related issues that might come from it, then of course, you’re going to see governments around the world starting to use the language and adapt to those particular challenges via their institutes,” he said.

Expect the U.K. to position the AISI, which has built up an early global lead on safety testing, as its latest national security offer to the U.S.

Suzanne Lynch, Pieter Haeck and Mohar Chatterjee contributed to this report.